From Concept to Launch in 50 Days—Building SiteReviewDesk.ai

Leaving the corporate world behind is both exhilarating and daunting. In late June 2024, I made the hard decision to step away from my role as an Engineering Leader at Cisco. My goal? To dive headfirst and hands-on into the world of artificial intelligence and independently build a SaaS product from scratch.

This is the story of how I brought sitereviewdesk.ai to life in just 50 days. I share a sprint retrospective towards the bottom of this blog.

The Need for Speed: Shifting from Corporate to Lean Execution

In the corporate environment, I found myself spending 80% or more of my time on meetings, status updates, and endless planning sessions. While coordination is essential for a leader in a large organization, it often leaves little room for tangible, personal execution. I craved the agility of immediate decision-making and the thrill of rapid development.

I aimed to invert my daily ratio to an extreme of 90% execution and 10% planning. This approach was inspired by the military's one-third/two-thirds rule: spend one-third of the time planning and allocate the remaining two-thirds to execution. As an independent developer, this was not just possible, it was imperative. My challenge to myself was to maximize learning and building with what I had left of summer 2024. At the time, I planned to re-evaluate my next steps in late September, so that was my personal "deadline".

Hands-On Learning: The Best Teacher

While I began with some courses from DeepLearning.ai, I have always known that hands-on application resonates more with me. That has stuck better with me, even more so as I get into the third decade of my career. For me at least, building, tinkering, and even stumbling along the way provided insights that no lecture could offer. I believe that real understanding comes from doing, not just listening and trying to absorb an inventory of theory and knowledge that I might or might not use later.

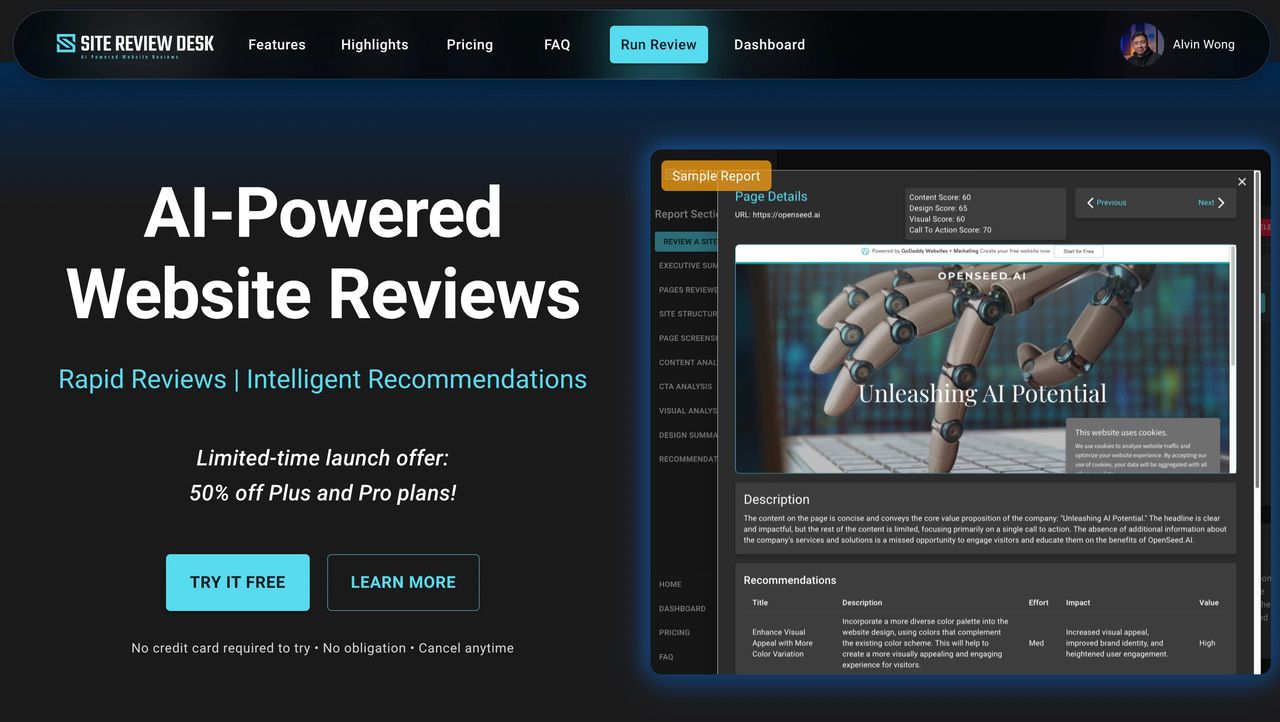

The Birth of SiteReviewDesk.ai

I started jotting down AI-based startup ideas that were both fun and potentially impactful. I wanted to solve real problems, even if they were old issues that could benefit from new solutions. That's when the idea for sitereviewdesk.ai crystallized—a service that automates website reviews using AI, providing deep insights and recommendations for better engagement.

50-Day Retrospective

What Went Well

- Morning Stand-ups: I began each day with a 10-minute personal stand-up meeting. This practice helped me jot down top priorities, carry over the previous day's missed tasks and goals if it made sense, and quickly adjust to any new developments overnight.

- Prioritizing Impact: Focusing on big-impact tasks over minor distractions kept the project on track. It’s easy to get lost in the weeds; staying focused on the core functionality was key.

- Daily Commits: Ending each day with a final commit to the main branch created a satisfying sense of progress and ensured that nothing was left hanging. Each day's batch of edits and rabbit holes can get gnarly, but just like a relationship with a spouse, try to never go to bed upset or leave things messy :).

- Establishing an AI Project Masterplan.md: This idea, which I picked up from Code with Brandon's template, was invaluable as a way to build and maintain a master project plan that you can give to any LLM for context on your project. It saves you from re-explaining to LLMs over and over again what exactly you're doing. I put this file in my Anthropic project file cache, for example, or kept pointing to it in Cursor/VS Code so the LLMs would read it first as a primer before answering and working on my code.

- Optimizing AI Tools: Making a habit of frequently updating the system/agent prompt and context documents was good hygiene and significantly improved my efficiency with AI development tools. Files like the masterplan.md file, a db schema description file, a project folder structure explainer, and the README.md for the repo were key. All of this helped reduce the time spent on iterative messaging with the LLMs and increased the quality of their outputs.

- Choosing the Right AI Tools: I found that Cursor outperformed VS Code with the Continue extension for my needs. Additionally, Ollama was fantastic for experimenting with different models locally without worrying about API costs. During development I probably made 80% of my LLM API calls through Ollama (Llama 3.1) and 20% to the paid production models (Gemini, GPT-4o, Claude 3.5 Sonnet, etc.).
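
To make the context-file habit and the local-model workflow above a bit more concrete, here is a minimal Python sketch. It is not the code behind sitereviewdesk.ai. The file names in `CONTEXT_FILES` and the helper names (`build_primer`, `build_ollama_payload`, `ask_local_model`) are made up for illustration, and it assumes an Ollama server running on its default local port with the `llama3.1` model already pulled.

```python
import json
from pathlib import Path
from urllib import request

# Hypothetical file names, modeled on the context documents listed above.
CONTEXT_FILES = ["masterplan.md", "db_schema.md", "folder_structure.md", "README.md"]


def build_primer(project_dir: Path, filenames=CONTEXT_FILES) -> str:
    """Concatenate whichever context files exist into one primer string."""
    sections = []
    for name in filenames:
        path = project_dir / name
        if path.is_file():
            body = path.read_text(encoding="utf-8").strip()
            sections.append(f"## {name}\n{body}")
    return "\n\n".join(sections)


def build_ollama_payload(primer: str, question: str, model: str = "llama3.1") -> dict:
    """Build the JSON body for Ollama's /api/generate endpoint."""
    return {
        "model": model,       # local model tag (assumed to be pulled already)
        "system": primer,     # project context goes in as the system message
        "prompt": question,
        "stream": False,      # ask for one JSON response instead of a stream
    }


def ask_local_model(payload: dict, host: str = "http://localhost:11434") -> str:
    """Send the payload to a locally running Ollama server and return its reply."""
    req = request.Request(
        f"{host}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with request.urlopen(req) as resp:
        return json.loads(resp.read())["response"]
```

A call would look something like `ask_local_model(build_ollama_payload(build_primer(Path(".")), "Where should the review logic live?"))`. Keeping the primer builder separate from the transport means the same context string could be handed to a paid model later without touching the files.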

Challenges Faced

- Tool Limitations: While using Anthropic's Project feature, I encountered issues with updating files. The inability to replace files with the same name led to confusion about which version the AI was referencing. I notified Anthropic about this key functionality gap, by the way. Maybe others have too!

- Platform Complexity: Running everything on Google Firebase and GCP was more convoluted than expected. The separate admin consoles and scattered documentation made navigation and troubleshooting time-consuming.

- Documentation Woes: Google's documentation was less user-friendly than I had hoped. I often resorted to using language models to find answers, which isn't ideal when you're deep in development.

Lessons Learned

- Avoid Manual Copy-Pasting: Early on, I wasted time manually transferring code snippets from AI chat windows. F**k that. Don't be like me. Switching to Cursor streamlined this process, integrating AI suggestions directly into my codebase. That's one of the things they implemented in the core of their forked editor, with better fuzzy logic to inject lines of code more accurately. Continue.dev, with the limitations of being a VS Code extension, didn't do as smooth a job with this. I haven't yet tested VS Code's latest releases to see how they compete with Cursor on this.

- Select the Right Models: General-purpose models like Llama 3.1 didn't meet my coding needs. Specialized code models like Codestral and paid models like GPT-4o, and especially Claude 3.5 Sonnet, provided better coding performance.

- Tool Efficacy Matters: Cursor's intuitive integration of AI suggestions into live code made it superior to alternatives like Continue.dev, which was hit-or-miss in its effectiveness. (But I did miss the "/<custom_command>" function from Continue for common prompts you want to use with the LLM window – Cursor should add that in!)

Reflecting on the Journey

Looking back, the experience was everything I hoped it would be. Of course, there were plenty of challenges along the way, but they were minor setbacks that turned into valuable lessons and contributed to both personal and professional growth. While I am certain I could have spent more time on upfront market research for this particular product, I believe that building something meaningful sometimes requires taking that leap of faith.

Moving Forward: What's Next?

As I continue to iterate on sitereviewdesk.ai, I'm diving into the realms of marketing and user acquisition, a whole new world of learning and set of challenges. But that's the beauty of this journey. Every step opens up new avenues for learning and growth.

If you're a software engineer looking to pivot, or someone interested in AI and rapid product development, I hope my experiences offer some valuable insights. Let's keep pushing the boundaries of what's possible.

Thanks for reading! Feel free to share your thoughts or ask any questions in the comments below.